As part of the development of JFrog Xray’s new Secrets Detection feature, we wanted to test our detection capabilities on as much real world data as possible, both to make sure we eliminate false positives and to catch any errant bugs in our code.

As we continued testing, we discovered there were a lot more identified active access tokens than we expected. We broadened our tests to full-fledged research, to understand where these tokens are coming from, to assess the viability of using them, and to be able to privately disclose them to their owners. In this blog post we’ll present our research findings and share best practices for avoiding the exact issues that led to the exposure of these access tokens.

Access tokens – what are they all about?

Cloud services have become synonymous with modern computing. It’s hard to imagine running any sort of scalable workload without relying on them. The benefits of using these services come with the risk of delegating our data to foreign machines and the responsibility of managing the access tokens that provide access to our data and services. Exposure of these access tokens may lead to dire consequences. A recent example was the largest data breach in history, which exposed one billion records containing PII (personally identifiable information) due to a leaked access token.

Unlike the presence of a code vulnerability, a leaked access token usually means an immediate “game over” for the security team, since using a leaked access token is trivial and, in many cases, negates all investments in security mitigations. It doesn’t matter how sophisticated the lock on the vault is if the combination is written on the door.

Cloud services intentionally add an identifier to their access tokens so that their services may perform a quick validity check of the token. This has the side effect of making the detection of these tokens extremely easy, even when scanning very large amounts of unorganized data.

| Platform | Example token |
| --- | --- |
| AWS | AKIAIOSFODNN7EXAMPLE |
| GitHub | gho_16C7e42F292c6912E7710c838347Ae178B4a |
| GitLab | glpat-234hcand9q289rba89dghqa892agbd89arg2854 |
| npm | npm_1234567890abcdefgh |
| Slack | xoxp-123234234235-123234234235-123234234235-adedce74748c3844747aed48499bb |
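To illustrate why these identifiers make detection easy, here is a minimal sketch of prefix-based matching in Python. It is not how Secrets Detection is implemented. The prefixes come from the example tokens above, while the lengths and character classes in each pattern, as well as the names `TOKEN_PATTERNS` and `find_candidate_tokens`, are assumptions made for illustration.

```python
import re

# Approximate patterns keyed by platform. The prefixes are taken from the
# example tokens; lengths and character classes are illustrative assumptions.
TOKEN_PATTERNS = {
    "AWS": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "GitHub": re.compile(r"\bgh[opsur]_[0-9A-Za-z]{36}\b"),
    "npm": re.compile(r"\bnpm_[0-9A-Za-z]{16,}\b"),
    "Slack": re.compile(r"\bxox[abprs]-[0-9A-Za-z-]{10,}"),
}


def find_candidate_tokens(text):
    """Return (platform, matched_string) pairs for every token-like string."""
    hits = []
    for platform, pattern in TOKEN_PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((platform, match.group(0)))
    return hits
```

A match here is only a candidate: a string that looks like a token still has to be verified before it can be called a leak.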

Which open-source repositories did we scan?

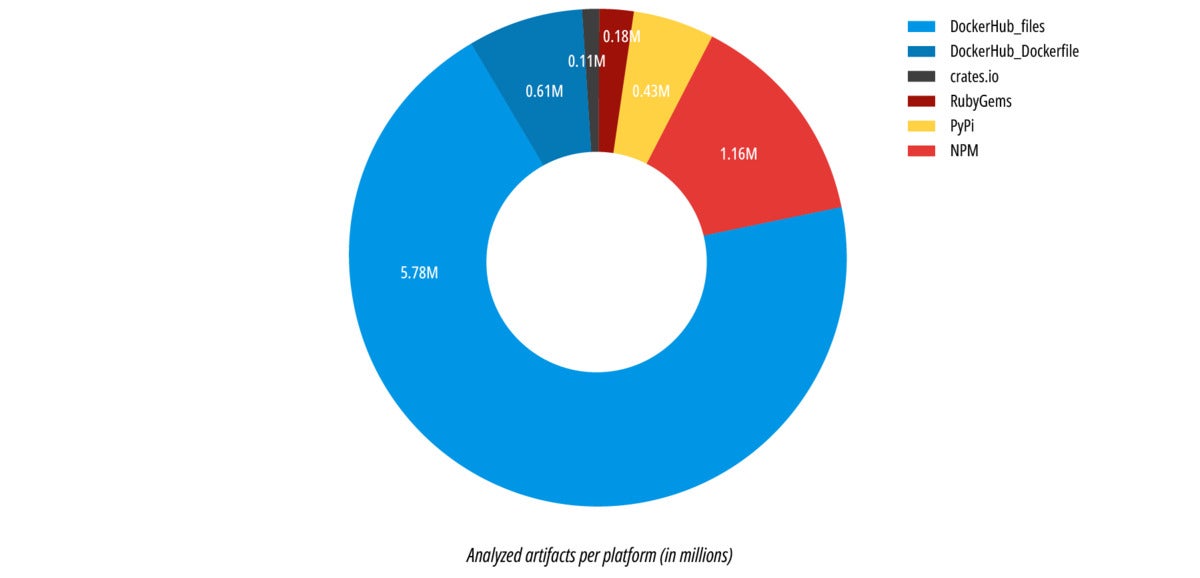

We scanned artifacts in the most common open-source software registries: npm, PyPI, RubyGems, crates.io, and DockerHub (both Dockerfiles and small Docker layers). All in all, we scanned more than 8 million artifacts.

In each artifact, we used Secrets Detection to find tokens that can be easily verified. As part of our research, we made a minimal request for each of the found tokens to:

- Check if the token is still active (i.e., it has not been revoked or otherwise made unavailable).

- Understand the token’s permissions.

- Understand the token’s owner (whenever possible) so we could disclose the issue privately to them.

For npm and PyPI, we also scanned multiple versions of the same package, to try and find tokens that were once available but removed in a later version.

‘Active’ vs. ‘inactive’ tokens

As mentioned above, each token that was statically detected was also run through a dynamic verification. This means, for example, trying to access an API that doesn’t do anything (no-op) on the relevant service that the token belongs to, just to see that the token is “available for use.” A token that passed this test (“active” token) is available for attackers to use without any further constraints.

We will refer to the dynamically verified tokens as “active” tokens and the tokens that failed dynamic verification as “inactive” tokens. Note that there might be many reasons that a token would show up as “inactive.” For example:

- The token was revoked.

- The token is valid, but has additional constraints to using it (e.g., it must be used from a specific source IP range).

- The token itself is not really a token, but rather an expression that “looks like” a token (false positive).
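The active/inactive distinction can be sketched as a small classification step. This is a simplified illustration, not the verification logic we ran. The `probe` callable is hypothetical: it stands in for a harmless, read-only request to the relevant service and is assumed to return an HTTP status code.

```python
from enum import Enum
from typing import Callable


class TokenStatus(Enum):
    ACTIVE = "active"
    INACTIVE = "inactive"


def verify_token(token: str, probe: Callable[[str], int]) -> TokenStatus:
    """Classify a statically detected token using a no-op request.

    `probe` is a hypothetical helper that sends a read-only request
    authenticated with `token` and returns the HTTP status code.
    """
    try:
        status_code = probe(token)
    except OSError:
        # Network failure: the token could not be verified, so it is not "active".
        return TokenStatus.INACTIVE
    if 200 <= status_code < 300:
        return TokenStatus.ACTIVE
    # Revoked tokens, constrained tokens, and false positives all end up here.
    return TokenStatus.INACTIVE
```

Note that this sketch cannot tell the three "inactive" cases apart; it only separates tokens that work as-is from everything else.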

Which repositories had the most leaked tokens?

The first question that we wanted to answer was, “Is there a specific platform where developers are most likely to leak tokens?”

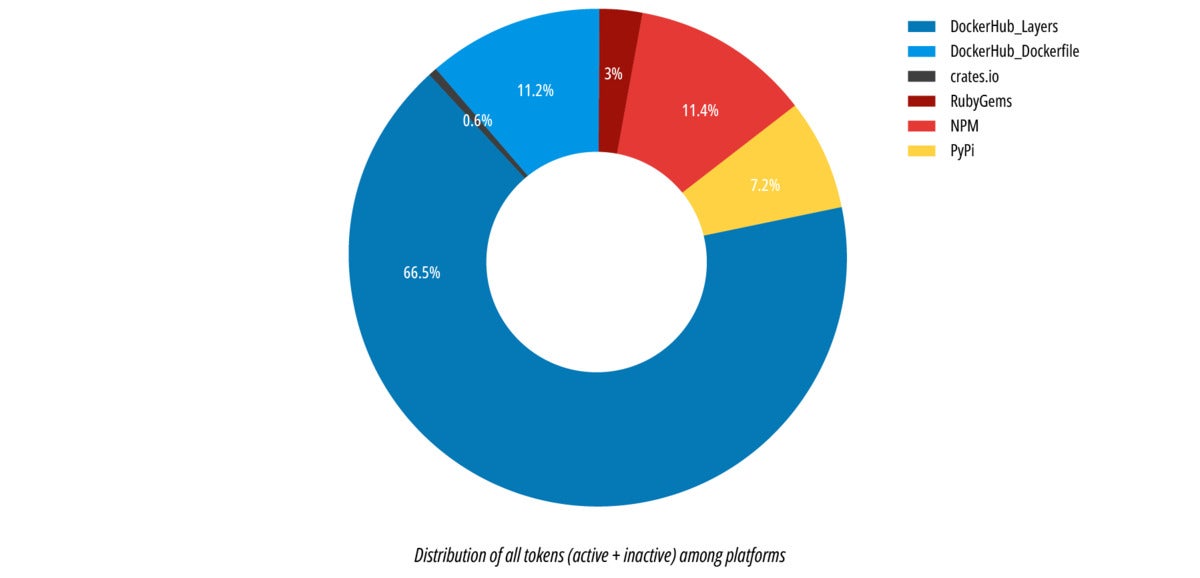

In terms of the sheer volume of leaked secrets, it seems that developers need to be especially careful not to leak secrets when building their Docker images (see the “Examples” section below for guidance on this).

We hypothesize that the vast majority of Docker Hub leaks are caused by the closed nature of the platform. While other platforms allow developers to set a link to the source repository and get security feedback from the community, there is a higher price of entry in Docker Hub. Specifically, the researcher must pull the Docker image and explore it manually, possibly dealing with binaries and not just source code.

An additional problem with Docker Hub is that no contact information is publicly shown for each image, so even if a leaked secret is found by a white hat researcher it might not be trivial to report the issue to the image maintainer. As a result, we can observe images that retain exposed secrets or other types of security issues for years.

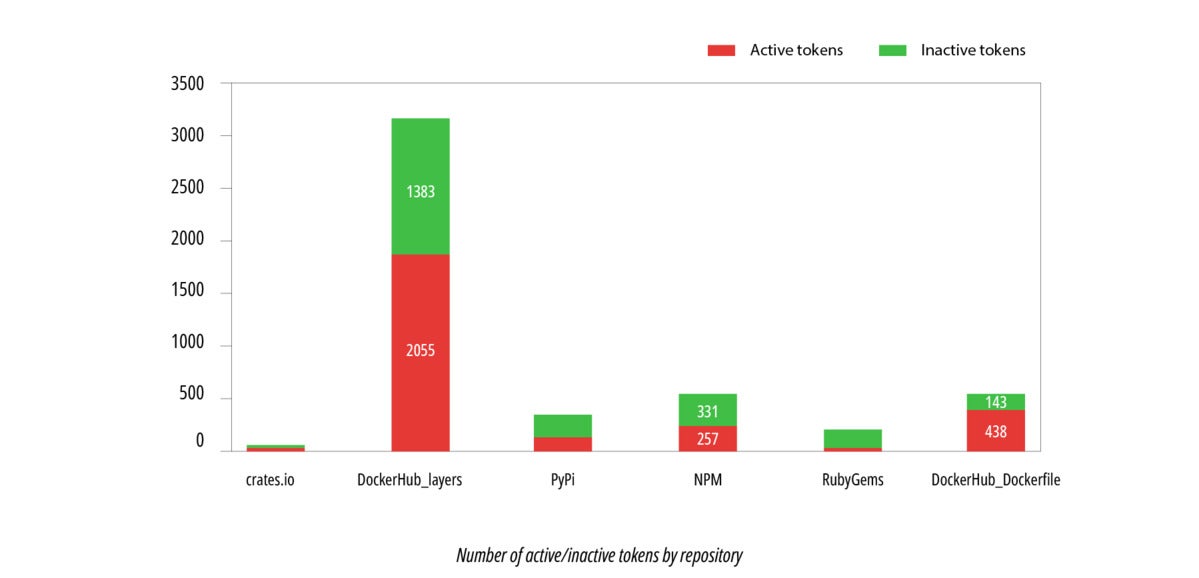

The following graph shows that tokens found in Docker Hub layers have a much higher chance of being active, compared to all other repositories.

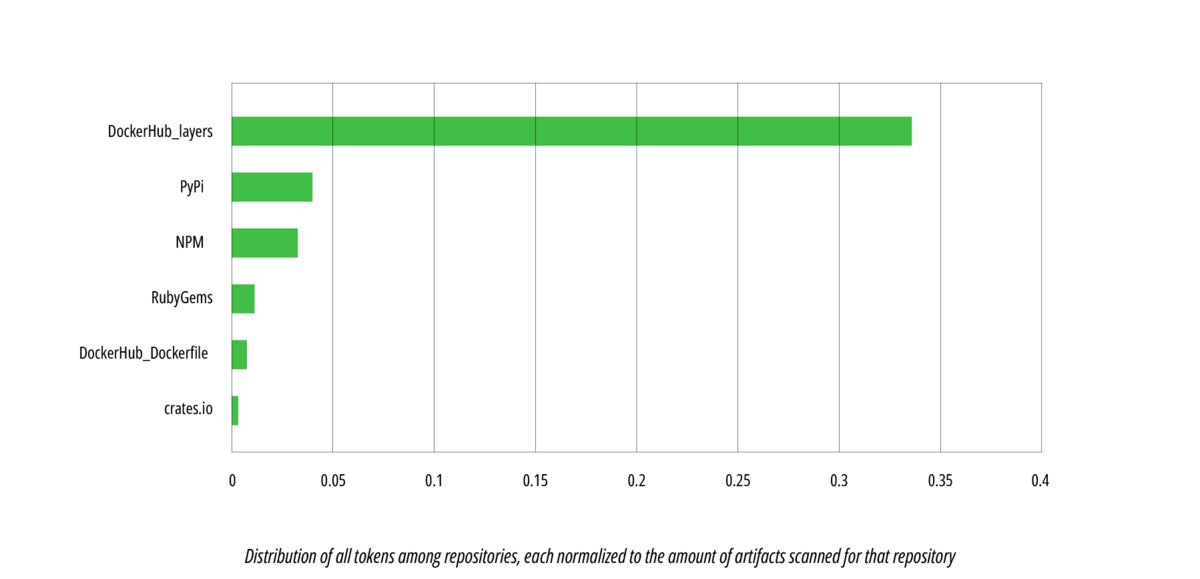

Finally, we can also look at the distribution of tokens normalized to the number of artifacts that were scanned for each platform.

When we normalize by the number of scanned artifacts on each platform and focus on the relative number of leaked tokens, we can see that Docker Hub layers still provided the most tokens, but second place is now claimed by PyPI. (In absolute terms, PyPI had the fourth-most leaked tokens.)

Which token types were leaked the most?

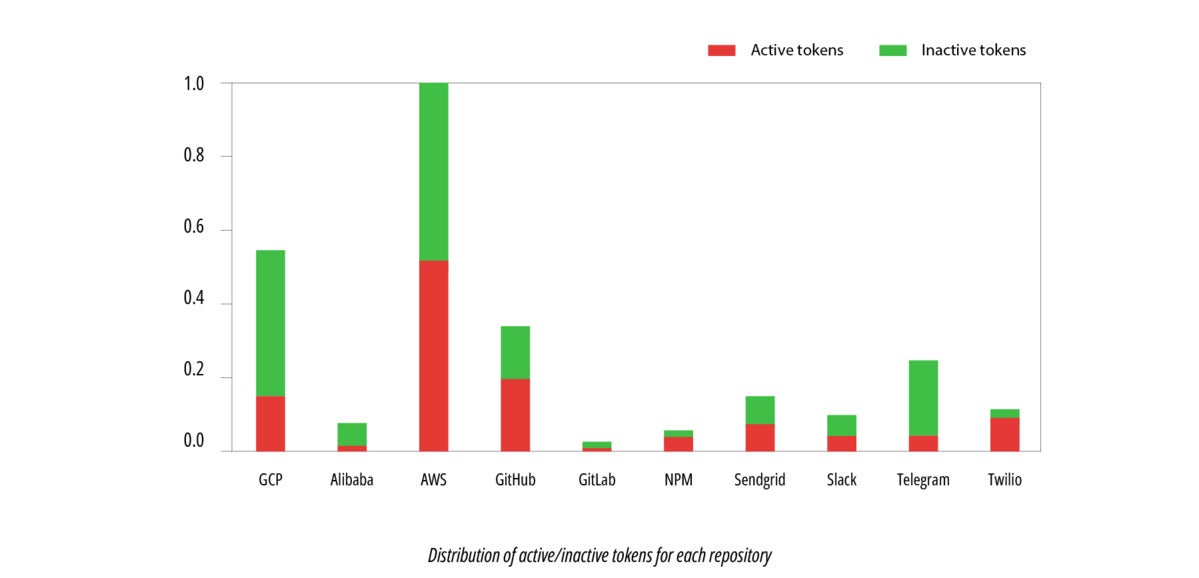

After scanning all token types that are supported by Secrets Detection and verifying the tokens dynamically, we tallied the results. The top 10 results are displayed in the chart below.

We can clearly see that Amazon Web Services, Google Cloud Platform, and Telegram API tokens are the most-leaked tokens (in that order). However, it seems that AWS developers are more vigilant about revoking unused tokens, since only ~47% of AWS tokens were found to be active. By contrast, GCP had an active token rate of ~73%.

Examples of leaked secrets in each repository

It is important to examine some real-world examples from each repository in order to raise awareness of the places where tokens are commonly leaked. In this section, we will focus on these examples, and in the next section we will share tips on how these examples should have been handled.

DockerHub - Docker layers

Inspecting the filenames that were present in a Docker layer and contained leaked credentials shows that the most common source of leakage is Node.js applications that use the dotenv package to store credentials in environment variables. The second most common source was hardcoded AWS tokens.

The table below lists the most common filenames in Docker layers that contained a leaked token.

| Filename | # of instances with active leaked tokens |
| --- | --- |
| .env | 214 |
| ./aws/credentials | 111 |
| config.json | 56 |
| gc_api_file.json | 50 |
| main.py | 47 |
| key.json | 40 |
| config.py | 38 |
| credentials.json | 35 |
| bot.py | 35 |

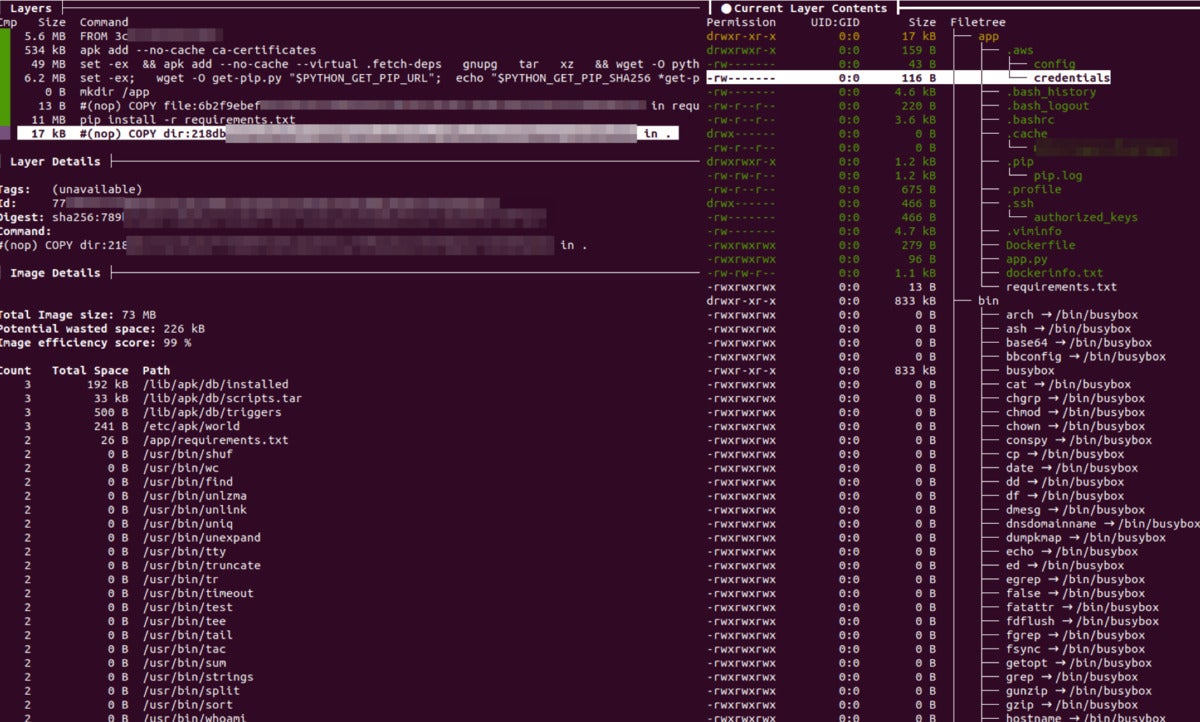

Docker layers can be inspected by pulling the image and running it. However, there are some cases where a secret might have been removed by an intermediate layer (via a “whiteout” file), and if so, the secret won’t show up when inspecting the final Docker image. It is possible to inspect each layer individually, using tools such as dive, and find the secret in the “removed” file. See the screenshot below.

Docker layer with credentials opened in the dive layer inspector.

Inspecting the contents of the “credentials” file reveals the leaked tokens.

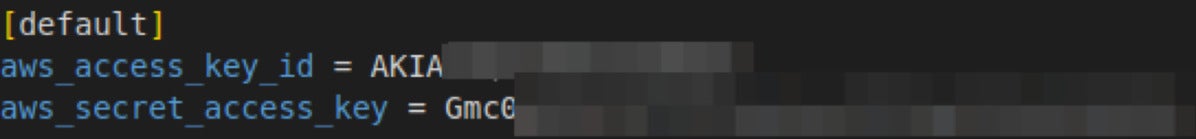

AWS credentials leaked via ./aws/credentials.
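The per-layer inspection described above can also be approximated in code. The following is a minimal sketch, not a replacement for a tool like dive. It assumes the image was exported with `docker save` and that the resulting archive contains a `manifest.json` whose first entry lists the layer archives under `"Layers"`. The `SUSPICIOUS_NAMES` set and the function name are hypothetical choices for illustration.

```python
import json
import tarfile

# Hypothetical shortlist of filenames worth a closer look.
SUSPICIOUS_NAMES = {".env", "credentials", "credentials.json", "key.json"}


def find_suspicious_files(image_tar_path):
    """List (layer, path) pairs for suspicious files in every layer.

    Each layer archive is read on its own, so a file that a later layer
    deleted via a whiteout entry is still reported from the earlier layer.
    """
    results = []
    with tarfile.open(image_tar_path) as image:
        # Assumption: `docker save` layout with a top-level manifest.json.
        manifest = json.load(image.extractfile("manifest.json"))
        for layer_name in manifest[0]["Layers"]:
            layer_file = image.extractfile(layer_name)
            with tarfile.open(fileobj=layer_file) as layer:
                for member in layer.getmembers():
                    base_name = member.name.rsplit("/", 1)[-1]
                    if member.isfile() and base_name in SUSPICIOUS_NAMES:
                        results.append((layer_name, member.name))
    return results
```

Because the function never looks at the merged filesystem, it sees exactly what anyone who pulls the image can see, including "removed" files.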

DockerHub - Dockerfiles

Docker Hub contained more than 80% of the leaked credentials in our research.

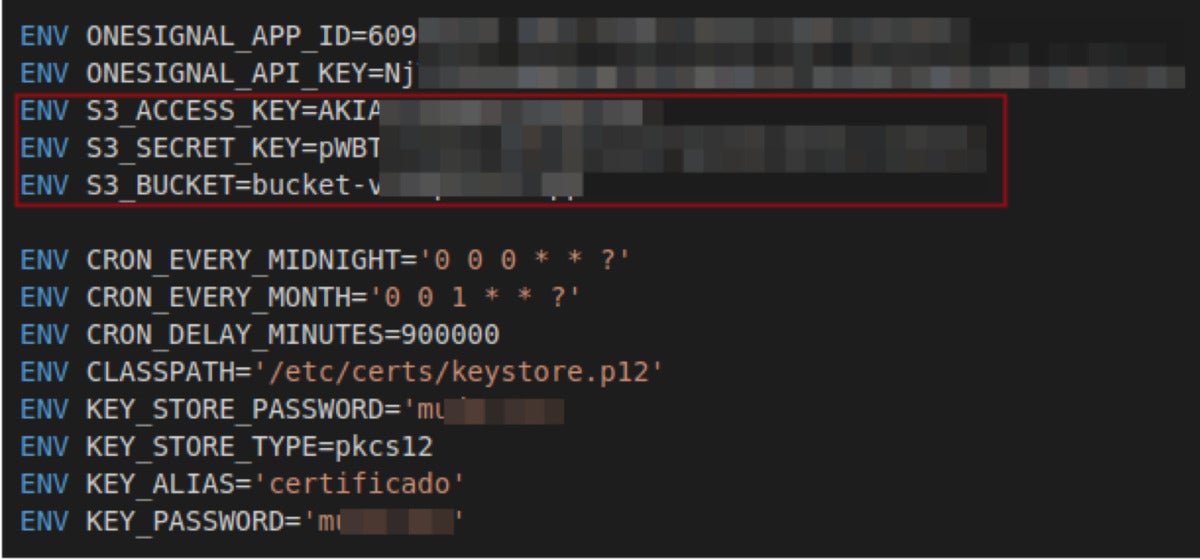

Developers usually use secrets in Dockerfiles to initialize environment variables and pass them to the application running in the container. After the image is published, these secrets become publicly leaked.

AWS credentials leaked through Dockerfile environment variables.

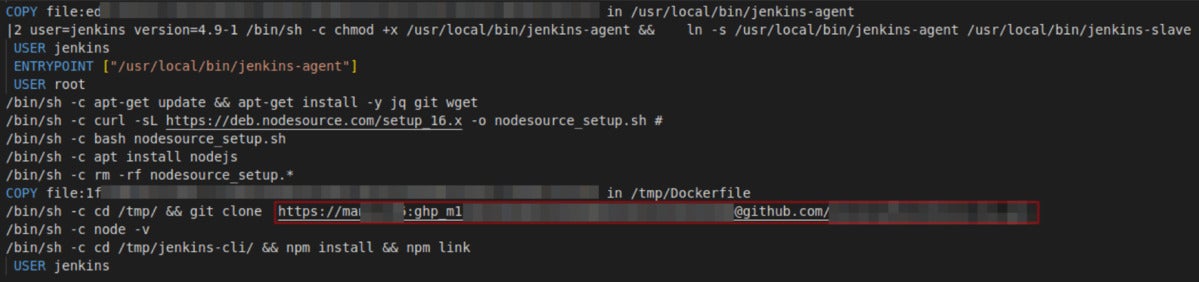

Another common option is the usage of secrets in Dockerfile commands that download the content required to set up the Docker application. The example below shows how a container uses an authentication secret to clone a repository into the container.

AWS credentials leaked through the Dockerfile via a git clone command.
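Both Dockerfile patterns described in this section, secrets assigned to environment variables and secrets embedded in download commands, can be flagged with a simple heuristic check. The sketch below is an illustration under stated assumptions, not a complete linter: the keyword list, the two regular expressions, and the name `audit_dockerfile` are all hypothetical, and the heuristic will produce some false positives.

```python
import re

# Assumption: variable names containing these keywords usually hold secrets.
ENV_SECRET = re.compile(
    r"^\s*(?:ENV|ARG)\s+\S*(?:KEY|SECRET|TOKEN|PASSWORD)\S*[=\s]+\S+",
    re.IGNORECASE,
)
# Matches URLs of the form scheme://user:secret@host.
URL_CREDENTIALS = re.compile(r"https?://[^/\s:@]+:[^/\s@]+@", re.IGNORECASE)


def audit_dockerfile(text):
    """Return (line_number, reason) pairs for risky Dockerfile lines."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        if ENV_SECRET.search(line):
            findings.append((number, "hardcoded secret in ENV/ARG"))
        if URL_CREDENTIALS.search(line):
            findings.append((number, "credentials embedded in URL"))
    return findings
```

An `ARG` declared without a default value is not flagged, since nothing is hardcoded in that case.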

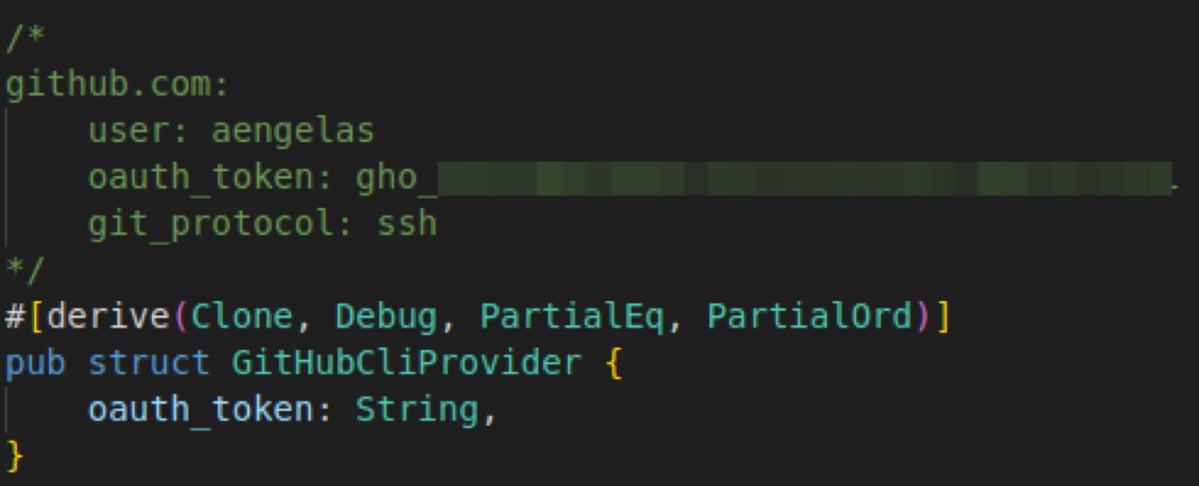

crates.io

With crates.io, the Rust package registry, we were pleased to see a different outcome from all the other repositories. Although Xray detected nearly 700 packages that contain secrets, only one of these secrets showed up as active. Interestingly, this secret wasn’t even used in the code, but was found within a comment.

PyPI

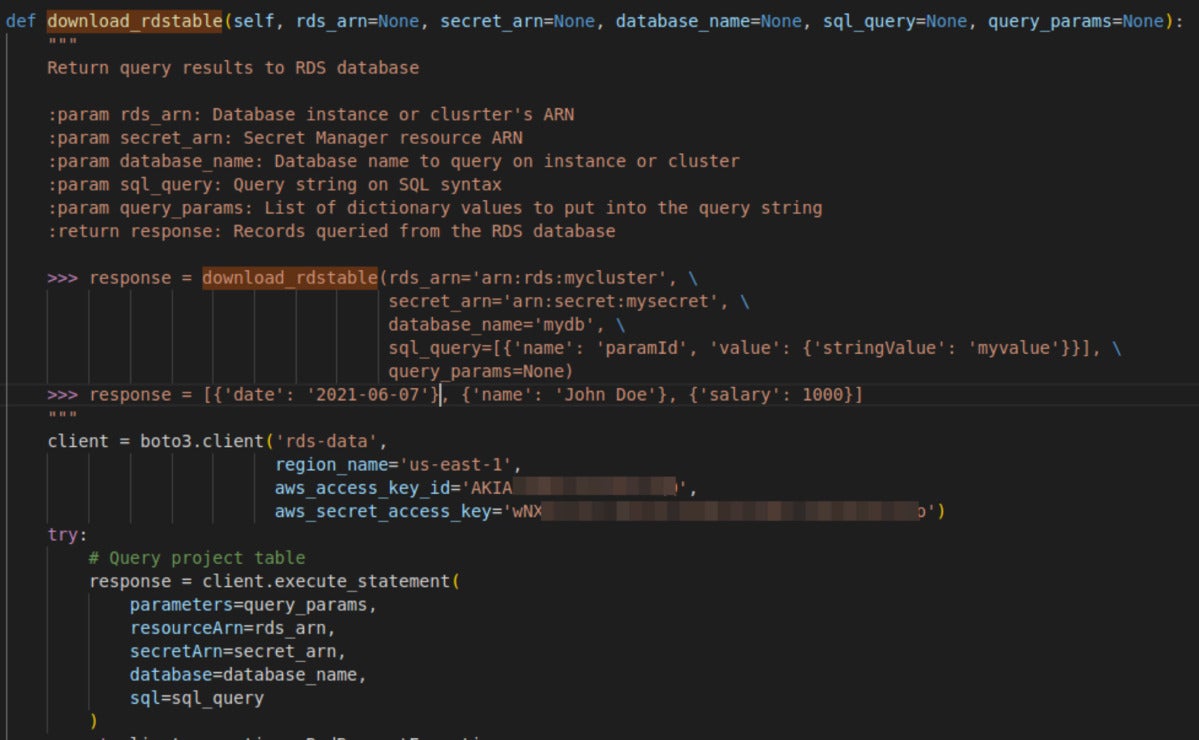

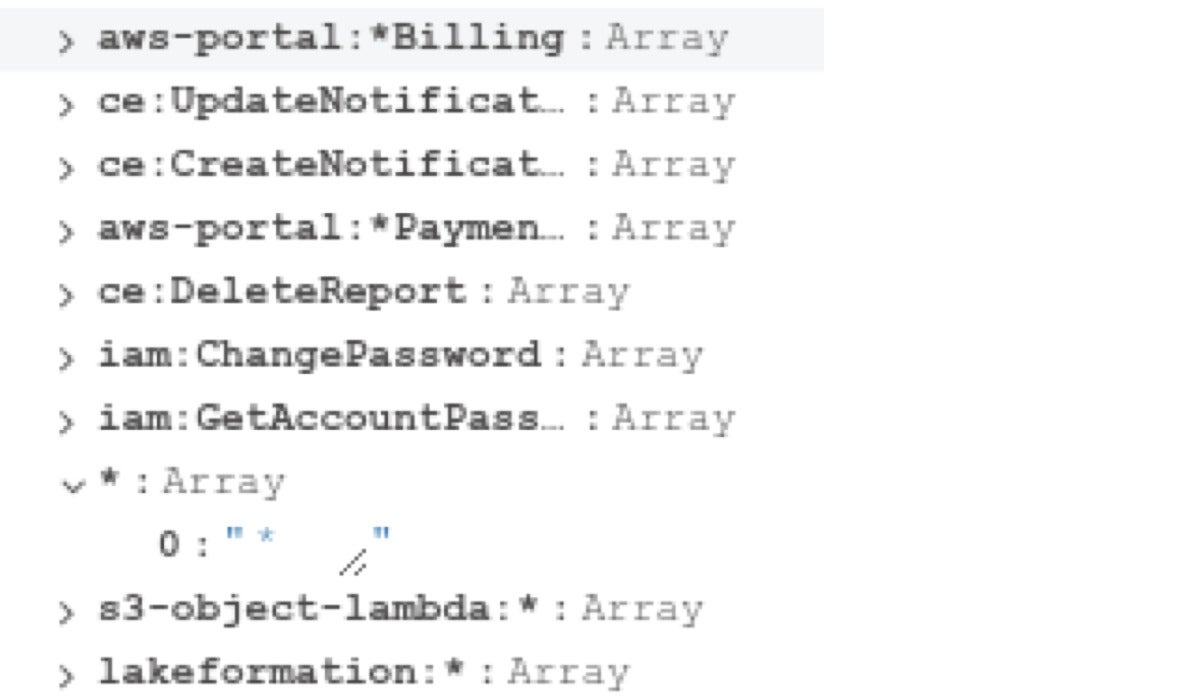

In our PyPI scans, most of the token leaks were found in actual Python code.

For example, one of the functions in an affected project contained an Amazon RDS (Relational Database Service) token. Storing a token like this may be fine, if the token only allows access for querying the example RDS database. However, when collecting permissions for the token, we discovered that the token gives access to the entire AWS account. (This token has been revoked following our disclosure to the project maintainers.)

AWS token leakage in the source code of a PyPI package.

Unintended full admin permissions (*/*) on an “example” Amazon RDS token.

npm

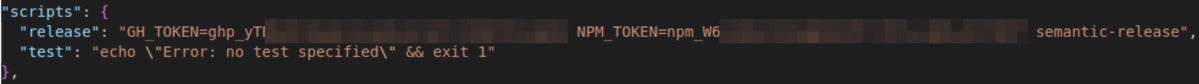

Other than hardcoded tokens in Node.js code, npm packages can have custom scripts defined in the scripts block of the package.json file. This allows running scripts defined by the package maintainer in response to certain triggers, such as the package being built, installed, etc.

A recurring mistake we saw was storing tokens in the scripts block during development, but then forgetting to remove the tokens when the package is released. In the example below we see leaked npm and GitHub tokens that are used by the build utility semantic-release.

npm token leakage in npm “scripts” block (package.json).
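A check for this mistake can be sketched in a few lines of Python. This is a minimal illustration, assuming the token shapes shown earlier in this post; the `TOKEN_LIKE` pattern and the name `scan_package_scripts` are hypothetical.

```python
import json
import re

# Assumed token shapes for npm and GitHub tokens (illustrative only).
TOKEN_LIKE = re.compile(
    r"\b(?:npm_[0-9A-Za-z]{16,}|gh[opsur]_[0-9A-Za-z]{36})\b"
)


def scan_package_scripts(package_json_text):
    """Map each script name to the token-like strings found in its command."""
    scripts = json.loads(package_json_text).get("scripts", {})
    return {
        name: TOKEN_LIKE.findall(command)
        for name, command in scripts.items()
        if TOKEN_LIKE.search(command)
    }
```

Running a check like this on `package.json` before publishing would catch a token that was pasted into a script during development.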

Usually, the dotenv package is supposed to solve this problem. It allows developers to create a local file called .env in the project’s root directory and use it to populate the environment variables in a test environment. Using this package correctly prevents the leak, but unfortunately, we found improper usage of the dotenv package to be one of the most common causes of secrets leakage in npm packages. Although the package documentation explicitly says not to commit the .env files to version control, we found many packages where the .env file was published to npm and contained secrets.

The dotenv documentation explicitly warns against publishing .env files:

> No. We strongly recommend against committing your .env file to version control. It should only include environment-specific values such as database passwords or API keys. Your production database should have a different password than your development database.
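One way to catch a published .env file is to inspect the package archive before it is uploaded. The sketch below assumes a gzip-compressed tarball such as the one `npm pack` produces; the function name `env_files_in_tarball` is hypothetical.

```python
import tarfile


def env_files_in_tarball(tarball_path):
    """Return archive paths whose filename is `.env` or starts with `.env.`.

    Template files such as `.env.example` are reported too, so that a
    human can confirm they contain placeholders rather than live values.
    """
    matches = []
    with tarfile.open(tarball_path, "r:gz") as archive:
        for member in archive.getmembers():
            base_name = member.name.rsplit("/", 1)[-1]
            if member.isfile() and (
                base_name == ".env" or base_name.startswith(".env.")
            ):
                matches.append(member.name)
    return matches
```

An empty result means no .env-style file made it into the archive; it says nothing about secrets stored in other files.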

RubyGems

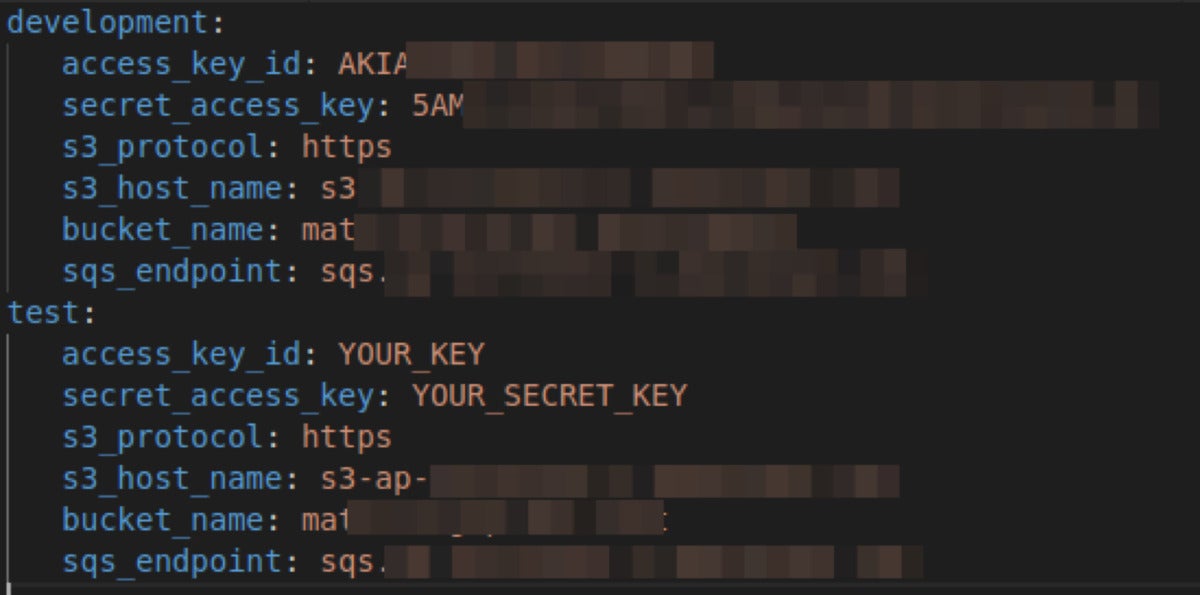

Going over the results for RubyGems packages, we saw no special outliers. The detected secrets were found either in Ruby code or in arbitrary configuration files inside the gem.

For example, here we can see an AWS configuration YAML that leaked sensitive tokens. The file is supposed to be a placeholder for AWS configuration, but the development section was altered with a live access/secret key.

AWS token leakage in spec/dummy/config/aws.yml.

The most common mistakes when storing tokens

After analyzing all the active credentials we’ve found, we can point to a number of common mistakes that developers should look out for, and we can share a few guidelines on how to store tokens in a safer way.

Mistake #1. Not using automation to check for secret exposures

There were plenty of cases where we found active secrets in unexpected places: code comments, documentation files, examples, or test cases. These places are very hard to check for manually in a consistent way. We suggest embedding a secrets scanner in your DevOps pipeline and alerting on leaks before publishing a new build.

There are many free, open-source tools that provide this kind of functionality. One of our OSS recommendations is TruffleHog, which supports a plethora of secrets and validates findings dynamically, reducing false positives.

For more sophisticated pipelines and broad integration support, we provide JFrog Xray.
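To make the idea of a pipeline gate concrete, here is a minimal sketch of a pre-publish check in Python. It is far less capable than the scanners mentioned above and is meant only to show where such a step fits. The two patterns are assumed token shapes, and `scan_tree` and `main` are hypothetical names.

```python
import os
import re

# Illustrative patterns only (assumed shapes); real scanners cover many more.
PATTERNS = [
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    re.compile(r"\bgh[opsur]_[0-9A-Za-z]{36}\b"),
]


def scan_tree(root):
    """Return sorted (relative_path, line_number) pairs for suspicious lines."""
    findings = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            try:
                with open(path, encoding="utf-8", errors="ignore") as handle:
                    for number, line in enumerate(handle, start=1):
                        if any(p.search(line) for p in PATTERNS):
                            findings.append((os.path.relpath(path, root), number))
            except OSError:
                continue  # unreadable file: skip it rather than fail the scan
    return sorted(findings)


def main(argv):
    """Print findings and return a process exit code (1 if anything was found)."""
    hits = scan_tree(argv[0] if argv else ".")
    for path, number in hits:
        print(f"possible secret: {path}:{number}")
    return 1 if hits else 0
```

In a pipeline, a wrapper script would call `sys.exit(main(sys.argv[1:]))` so that a non-zero exit code blocks the publish step. Because every file is scanned, comments, documentation, examples, and test cases are covered along with the code.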

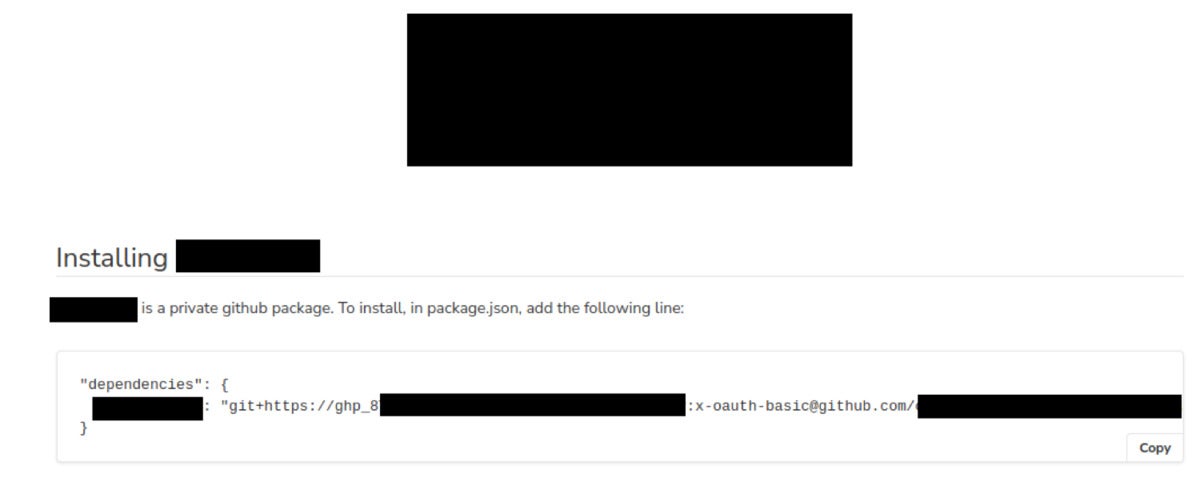

A GitHub token leaked in documentation; it was intended to be read-only but in reality provided full edit permissions.